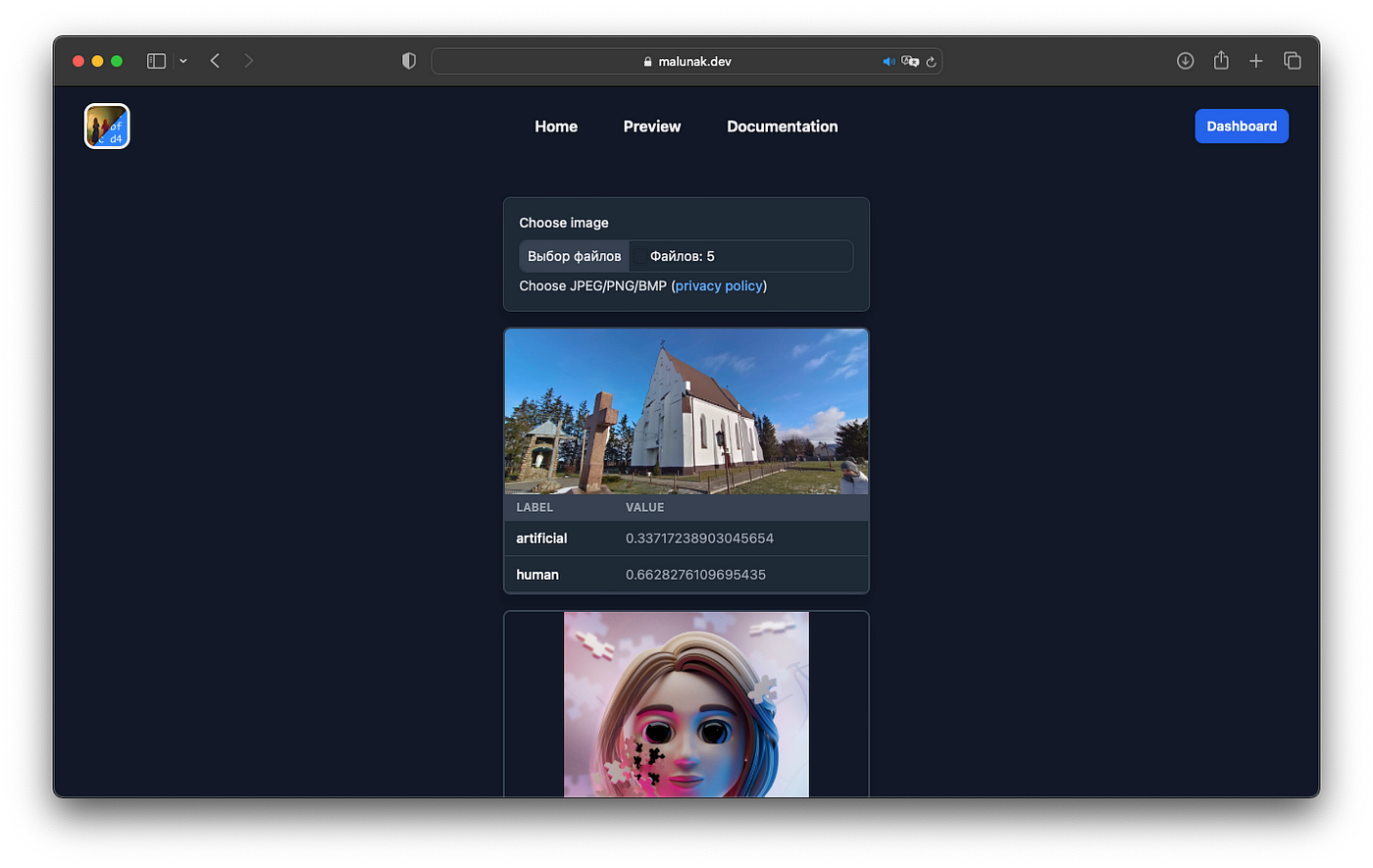

This article describes the development of the AI Image Detection model that powers Malunak APIs. Malunak is in alpha at the moment, so its API has some limitations. Still, you can register your project in the developer dashboard and start using it. Remember to activate the project before using your credentials.

Relevance

In 2022, many models for generating images from prompts became publicly available (Midjourney, Stable Diffusion, DALL-E). These models were trained on real images, including copyrighted works. This raises questions about the authorship and artistic value of the generated works.

Some platforms have already started litigation against the authors of models that were trained on works licensed by those platforms. Other platforms are not ready to take responsibility for distributing images created by artificial intelligence, since these are synthesized from copyrighted and licensed works.

The purpose of this work is to create tools that can determine whether an image was created by humans or generated by artificial intelligence.

Development

Analysis of existing solutions

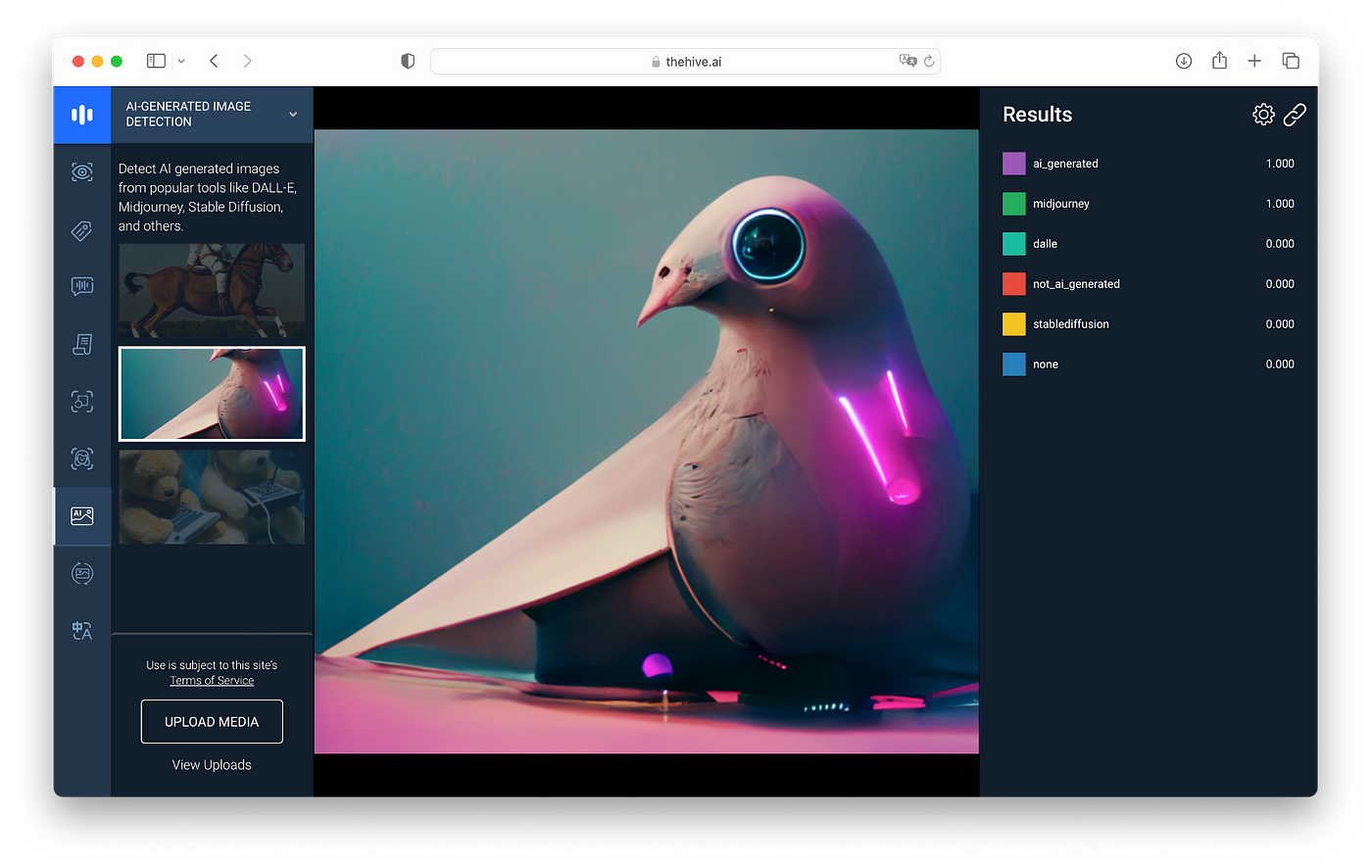

One of the existing solutions is TheHiveAI. This is a proprietary solution that provides an API for classifying images, including the type of model used to generate them. TheHiveAI was trained on images from models that predate Stable Diffusion v2, and it sometimes performs poorly on images generated by newer versions of the model. There is also a limit on the number of requests.

There is also an open-source solution, AI Image Detector. It does not work correctly on noisy images. The accuracy declared by its author was also overstated: the real figure is 73% rather than 94%, a gap of 21 percentage points.

Noisy Images

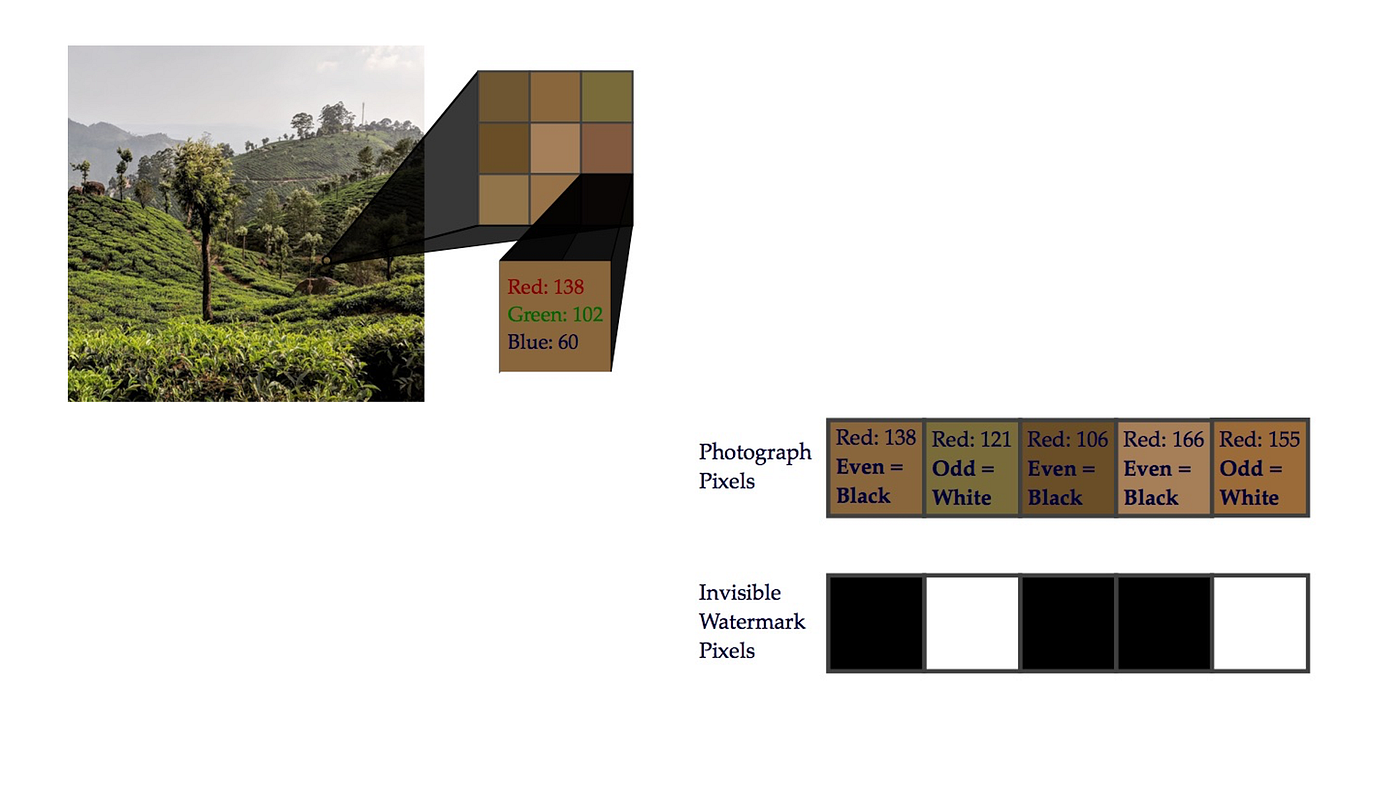

Why are noisy images a problem? Stable Diffusion and Midjourney add an invisible watermark to their images: the value of each pixel is adjusted to the nearest even or odd value, and the information is encoded in that parity. This affects AI image detection.
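The parity scheme described above can be illustrated with a toy sketch. This is only a model of the idea as stated in this article, not the actual watermarking code of Stable Diffusion or Midjourney; the function names and the sample pixel values are hypothetical.

```python
def embed_bits(pixels, bits):
    """Hide one bit per pixel by forcing the pixel's parity (even = 0, odd = 1)."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the message")
    out = list(pixels)
    for i, bit in enumerate(bits):
        value = out[i]
        if value % 2 != bit:
            # Move to a neighbouring value with the right parity, staying in 0..255.
            value = value - 1 if value == 255 else value + 1
        out[i] = value
    return out


def extract_bits(pixels, count):
    """Read the hidden bits back from the parity of the first `count` pixels."""
    return [p % 2 for p in pixels[:count]]


pixels = [12, 200, 255, 37, 90, 0, 141, 64]  # hypothetical 8-bit grayscale values
message = [1, 0, 0, 1, 1, 1, 0, 0]

marked = embed_bits(pixels, message)
recovered = extract_bits(marked, len(message))
max_change = max(abs(a - b) for a, b in zip(pixels, marked))

print(marked)      # [13, 200, 254, 37, 91, 1, 142, 64]
print(recovered)   # [1, 0, 0, 1, 1, 1, 0, 0]
print(max_change)  # 1
```

No pixel changes by more than one intensity level, which is why such a mark is invisible to the eye while still altering the low-level statistics of the image.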

Dataset

To build the dataset, a method similar to the one behind AI Image Detector was used: images were collected from communities on Reddit, as well as from other catalogs and services.

New images were also generated from prompts found on Reddit. The reason is that images posted on Reddit tend to be the “best” works, meaning those with the fewest artifacts: authors try to publish high-quality results, and community moderators remove images with numerous artifacts. This work needed a large amount of data that also includes images with artifacts, so that the model does not fail on cases that are obvious to a person.

In total, the dataset contained 7236 images, split 75% for training (5427 images) and 25% for validation (1809 images). By label: “artificial”, 3662 images; “human”, 3574 images.
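The split sizes can be checked with a short sketch. The article does not say how the split was performed, so the seeded random shuffle and the `split_dataset` helper below are assumptions made for illustration only.

```python
import random


def split_dataset(items, train_fraction=0.75, seed=0):
    """Shuffle a copy of `items` and cut it into training and validation parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Label counts reported in the article; real entries would be (image, label) pairs.
labels = ["artificial"] * 3662 + ["human"] * 3574

train, val = split_dataset(labels)
print(len(labels), len(train), len(val))  # 7236 5427 1809
```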

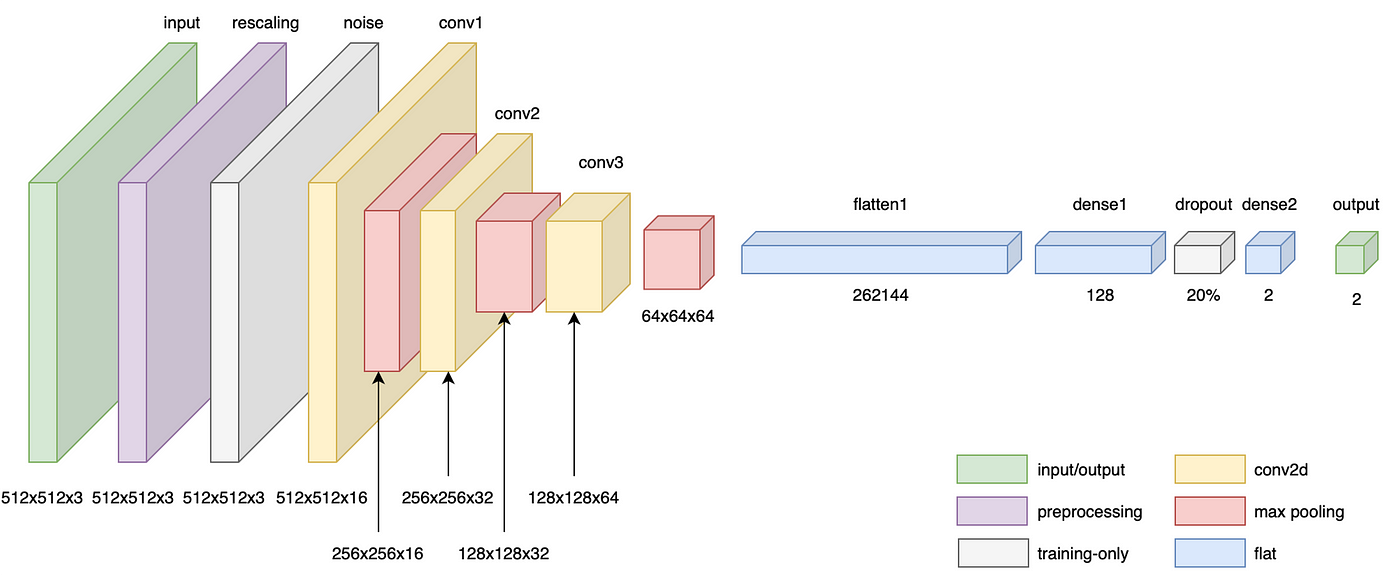

Training

The model is a conventional convolutional neural network with two notable features. First, Gaussian noise is applied to all images during training, so that the model can still recognize the origin of noisy images. Second, a Dropout layer before the last layer of the model drops 20% of the neurons to improve the model's quality.
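The two features can be sketched without any framework. This is a simplified illustration rather than the actual training code: the noise level `sigma=0.05` is an assumed value (the article does not give one), and in practice both steps would normally be layers of a deep learning library.

```python
import random


def add_gaussian_noise(pixels, sigma=0.05, rng=random):
    """Add zero-mean Gaussian noise to pixels scaled to [0, 1], then clip."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def dropout(activations, rate=0.2, rng=random):
    """Inverted dropout: zero each activation with probability `rate` and
    rescale the survivors so the expected value stays the same."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)

image = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical normalized pixel values
noisy = add_gaussian_noise(image, sigma=0.05, rng=rng)

features = [1.0] * 10_000            # hypothetical activations before the last layer
dropped = dropout(features, rate=0.2, rng=rng)
zero_share = dropped.count(0.0) / len(dropped)  # close to 0.2
```

Both steps apply only during training; at inference time the image and the activations pass through unchanged.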

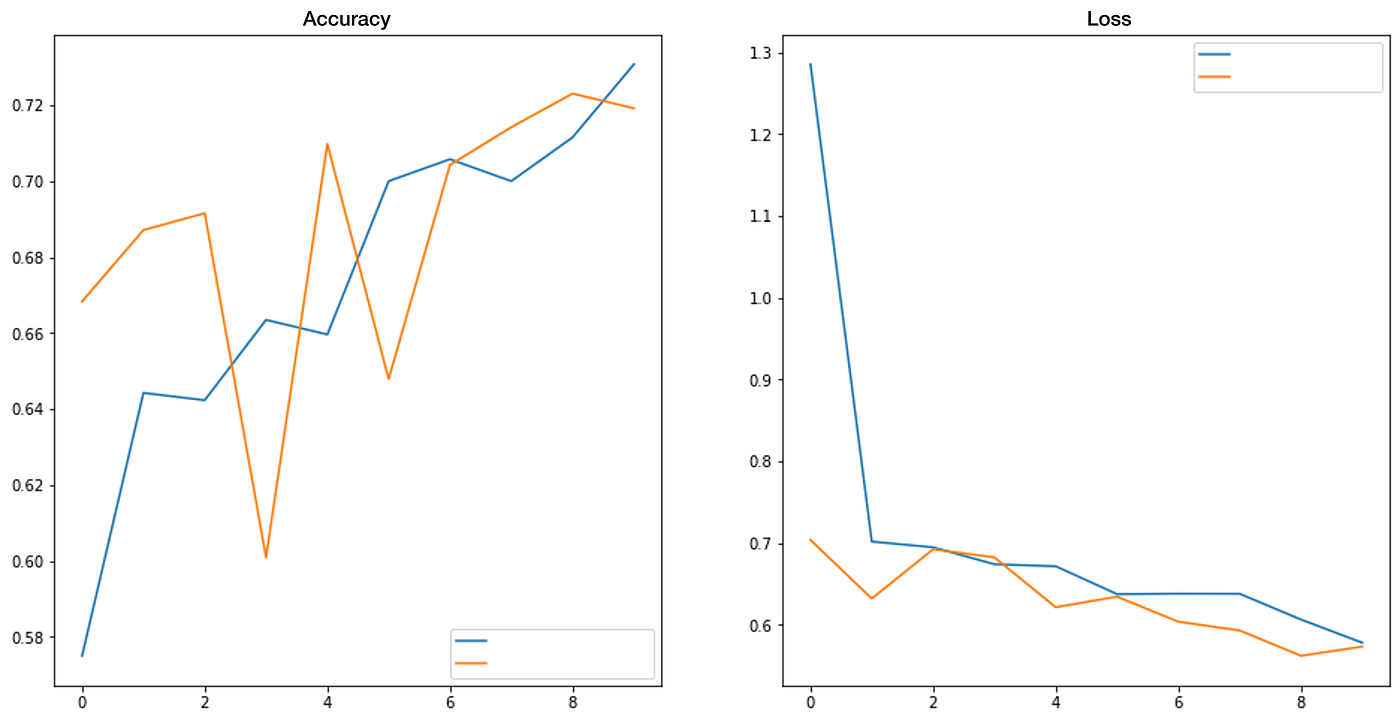

The plots show the accuracy and loss for the training (blue) and validation (orange) data. Based on these two graphs, we can conclude that the model shows no signs of overfitting. The accuracy of the model was about 77%.

For further testing in this work, we used the version of our own model with 77% accuracy and the lowest loss.

Testing

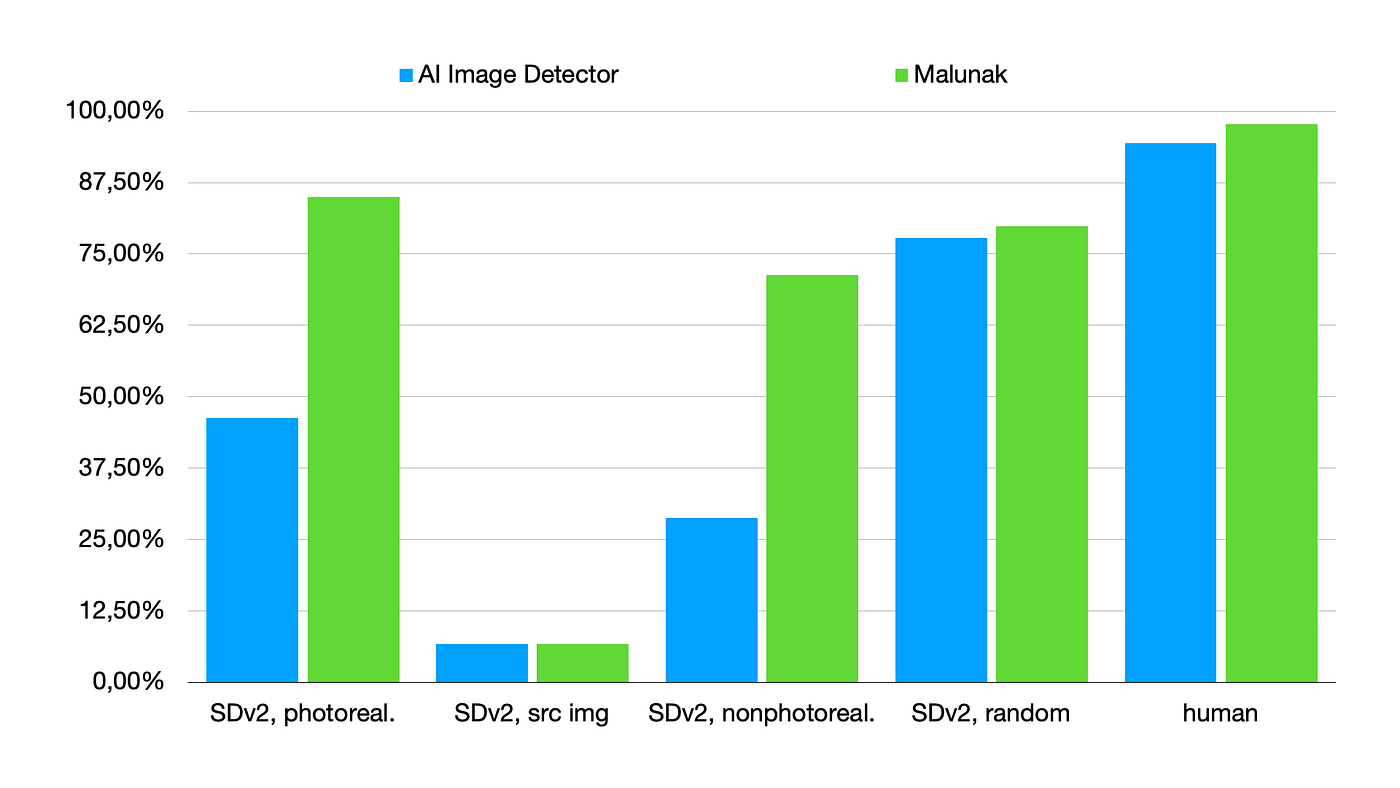

TheHiveAI was excluded from testing because of its limit on the number of API requests. The test datasets were also reduced, as there was a possibility that the AI Image Detector deployment on HuggingFace Spaces would soon be disabled. A set of 5 categories was used.

Predictions were scored as follows: each image was assigned the label with the highest model prediction value.

Comparing the accuracy of AI Image Detector and Malunak’s solution, Malunak did better in every category except the second.
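A minimal sketch of this scoring rule is shown below. The model outputs and ground-truth labels are made-up examples, not results from the tests described here.

```python
def predict_label(scores):
    """Return the label whose predicted value is highest."""
    return max(scores, key=scores.get)


def accuracy(predictions, truths):
    """Share of predictions that match the ground-truth labels."""
    correct = sum(p == t for p, t in zip(predictions, truths))
    return correct / len(truths)


# Hypothetical model outputs for four images.
outputs = [
    {"artificial": 0.91, "human": 0.09},
    {"artificial": 0.40, "human": 0.60},
    {"artificial": 0.55, "human": 0.45},
    {"artificial": 0.20, "human": 0.80},
]
truths = ["artificial", "human", "human", "human"]

predictions = [predict_label(scores) for scores in outputs]
print(predictions)                    # ['artificial', 'human', 'artificial', 'human']
print(accuracy(predictions, truths))  # 0.75
```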

Results

Existing solutions for detecting images created by artificial intelligence were studied, our own software was developed, a model was trained to take into account that noise can be applied to images, and tests were carried out. The accuracy of the model was 77%.

The distinguishing feature of this work is that the known solutions do not handle noisy images as well as Malunak does. The model is recommended only for a preliminary assessment of the origin of images, with the final decision left to a human.

We invite you to join us and help develop Malunak, a new cloud-based solution for AI Image Detection. Sign in with your GitHub account through the Developer Dashboard and use the Malunak API Reference to develop your apps. Remember to activate your projects before working with the API, and keep in mind that Malunak is in the alpha stage right now.